The $250 Billion Shock: How Google Just Shattered Nvidia's AI Monopoly—And Why It Matters for Trading

TL;DR: In 24 hours, Google's tensor processing units (TPUs) erased $250 billion from Nvidia's market value, signaling a seismic shift in AI infrastructure. Meta's reported move toward TPUs isn't just tech news; it's a wake-up call for anyone building AI-powered trading systems. Here's why this matters, what the numbers show, and what it means for the future of algorithmic trading.

The Moment Everything Changed

On November 25, 2025, something remarkable happened. While the broader market celebrated gains, Nvidia—the $4 trillion AI chipmaker that has dominated every AI conversation for the past three years—got hammered.

The trigger? A report from The Information revealing that Meta Platforms is in advanced talks to spend billions on Google's competing AI chips instead of Nvidia's.

Within hours:

- Nvidia stock fell 4%, erasing approximately $250 billion in market value

- Alphabet stock surged 4%, pushing it toward a historic $4 trillion valuation

- Broadcom stock jumped 11% (it co-develops Google's TPUs)
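A quick sanity check on how percentage moves map to dollar moves, as a minimal sketch. It assumes the round "$4 trillion" market value quoted above as the base; the function names are illustrative only. On that base, a 4% move is about $160 billion, while a $250 billion move works out to a little over 6%, which suggests the two headline figures were measured at different points in the session or against a different base.

```python
def implied_value_change(market_cap_usd: float, pct_move: float) -> float:
    """Dollar change in market value for a given percentage move."""
    return market_cap_usd * pct_move / 100.0


def implied_pct_move(market_cap_usd: float, value_change_usd: float) -> float:
    """Percentage move implied by a given dollar change in market value."""
    return value_change_usd / market_cap_usd * 100.0


# Assumption: the article's round "$4 trillion" figure, not an exact quote.
NVIDIA_CAP_USD = 4.0e12

loss_at_4_pct = implied_value_change(NVIDIA_CAP_USD, 4.0)
pct_for_250b = implied_pct_move(NVIDIA_CAP_USD, 250e9)

print(f"4% of $4T      = ${loss_at_4_pct / 1e9:.0f}B")
print(f"$250B on a $4T base = {pct_for_250b:.2f}%")
```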

Wall Street wasn't debating whether TPUs are "interesting." It was pricing in a fundamental shift in the AI infrastructure landscape.

For the first time since the AI boom began, Nvidia's stranglehold on the market was being questioned in real time by the market itself.

Why This Matters: The Numbers Behind the Story

Nvidia's Iron Grip (Is Loosening)

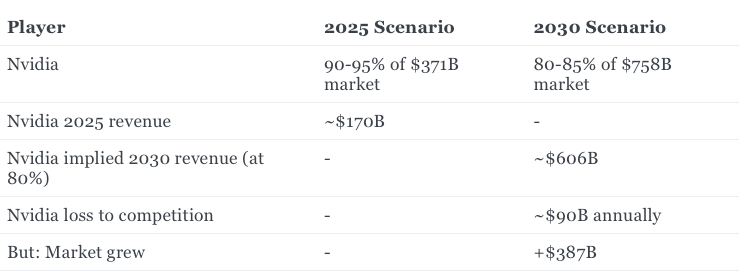

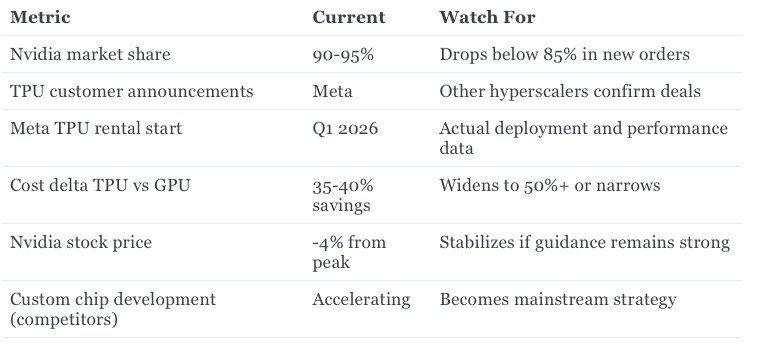

Let's start with the undisputed fact: Nvidia commands 90-95% of the AI accelerator market. That dominance has been absolute.

But here's what changed: That market is no longer a single market. It's fracturing.

Meta's scale makes this real.

Meta is spending up to $72 billion on AI infrastructure in 2025 alone—more than many countries' GDP. According to Morgan Stanley and Bank of America estimates, hyperscalers (Meta, Microsoft, Alphabet, Amazon, and others) will spend approximately $3 trillion on data centers through 2028.

If Meta—even partially—shifts from Nvidia to TPUs, it's not a minor account loss. It's a structural shift. The math:

- Meta's AI budget: $72 billion (2025)

- Projected 2026 spend: "Notably larger" than $72B (likely $90-100B+)

- TPU potential capture from Meta: Even 20% represents $14-20 billion in diverted revenue

For context, that's roughly 10% of Nvidia's annual revenue being directly threatened—not as speculation, but as a deal in "advanced talks."
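The math above can be reproduced in a few lines. This is a rough sketch under the article's own framing: it treats Meta's whole AI budget as spend that could shift between chip vendors, and it backs out the Nvidia revenue base that "roughly 10%" would imply rather than assuming a reported revenue figure. All names are illustrative.

```python
# Figures taken from the bullets above.
META_CAPEX_LOW_USD = 72e9    # 2025 AI budget
META_CAPEX_HIGH_USD = 100e9  # upper end of the 2026 estimate
TPU_CAPTURE = 0.20           # illustrative 20% shift to TPUs


def diverted_revenue(capex_usd: float, capture: float) -> float:
    """Spend that would move away from Nvidia at a given capture rate."""
    return capex_usd * capture


def implied_revenue_base(diverted_usd: float, fraction: float) -> float:
    """Annual revenue for which `diverted_usd` equals `fraction` of the total."""
    return diverted_usd / fraction


diverted_low = diverted_revenue(META_CAPEX_LOW_USD, TPU_CAPTURE)
diverted_high = diverted_revenue(META_CAPEX_HIGH_USD, TPU_CAPTURE)

print(f"Diverted revenue: ${diverted_low / 1e9:.1f}B to ${diverted_high / 1e9:.1f}B")
print(
    "Revenue base implied by 'roughly 10%': "
    f"${implied_revenue_base(diverted_low, 0.10) / 1e9:.0f}B to "
    f"${implied_revenue_base(diverted_high, 0.10) / 1e9:.0f}B"
)
```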

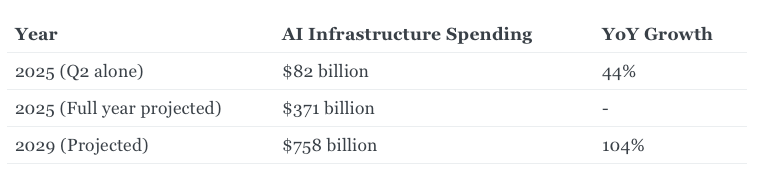

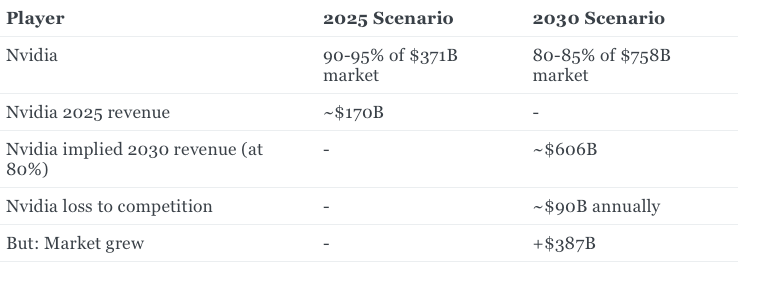

The AI Infrastructure Gold Rush

But the real story isn't just about Nvidia losing Meta. It's about the explosion of AI infrastructure spending itself: a $387 billion market expansion in four years.

And here's the critical insight: Competition drives down costs.

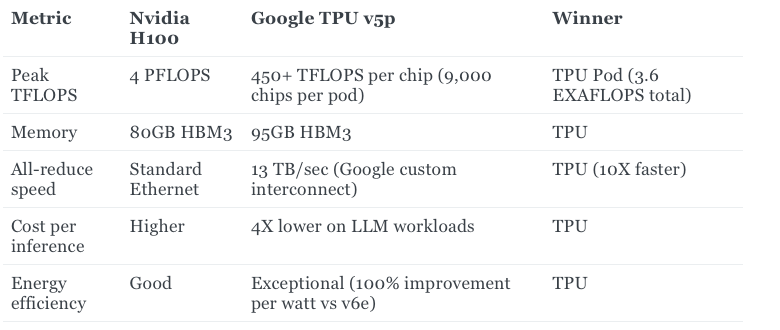

TPUs already show significant efficiency advantages over Nvidia GPUs on specific workloads:

- 60-65% more efficient than Nvidia H100 GPUs for certain AI tasks

- 4X better performance per dollar on large language model inference

- 5X faster training speed for dynamic model workloads compared to some GPU setups

- 1.4X better performance per dollar on specific use cases

These aren't theoretical claims. They're backed by real deployments:

- Gemini 3, Google's latest frontier-scale AI model, was trained entirely on TPUs

- Anthropic committed to using up to 1 million TPUs

- Midjourney achieved a 65% cost reduction in inference after migrating from GPUs to TPUs

- Cohere saw 3X throughput improvements using TPUs

Wall Street's Message

The market's reaction—not the headlines, but the actual stock movements—reveals what professional investors think:

This isn't hype. This is a genuine competitive threat.

Alphabet's 4% gain wasn't speculation. It was repricing. Nvidia's 4% decline wasn't panic. It was de-risking.

Broadcom's 11% jump confirmed it: TPU demand is real enough that the supply chain is benefiting.

Nvidia might "lose" share, but the expanding market means absolute revenue still grows. That's why smart traders are asking: This is a negative for Nvidia, but is it negative enough to justify a sustained 4% decline?

Wall Street's answer: No, not in the long term. But yes, in the near term, as uncertainty reprices the stock.

The Technical Reality: Why TPUs Work

Nvidia's standard response—delivered via public statement—was: "We're a generation ahead of the industry."

That's defensible on general-purpose AI. But it misses the point.

TPUs are ASICs (Application-Specific Integrated Circuits). They're optimized for one job: training and running neural networks, large language models included.

Think of it like this: Nvidia GPUs are Swiss Army knives. Incredibly versatile, can do anything, deployed everywhere. TPUs are scalpels. They do one thing—matrix multiplication for neural networks—better than anything else.
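To make "matrix multiplication for neural networks" concrete, here is a minimal pure-Python sketch of one fully connected layer. It is not TPU code and the names (`matmul`, `dense_layer`) are illustrative; the point is only that the bulk of the work is a matrix multiply, which is the operation this kind of hardware is built to accelerate.

```python
def matmul(a, b):
    """Multiply an (m x k) matrix by a (k x n) matrix, both given as lists of rows."""
    k = len(b)
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions must match")
    n = len(b[0])
    return [
        [sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)]
        for i in range(len(a))
    ]


def dense_layer(inputs, weights, bias):
    """One fully connected layer: relu(inputs @ weights + bias)."""
    pre_activation = matmul(inputs, weights)
    return [
        [max(0.0, value + bias[j]) for j, value in enumerate(row)]
        for row in pre_activation
    ]


# Toy values chosen purely for illustration.
batch = [[1.0, 2.0], [0.5, -1.0]]             # 2 examples, 2 features each
weights = [[1.0, 0.0, -1.0], [0.5, 1.0, 2.0]]  # 2 inputs -> 3 outputs
bias = [0.0, 0.1, -0.5]

layer_out = dense_layer(batch, weights, bias)
print(layer_out)
```

A real model stacks many such layers with far larger matrices, so almost all of the compute time goes into the multiply step.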

For Meta's specific use case (training and serving large AI models), TPUs are the sharper tool.

The Deal Timeline: Why 2026-2027 Matter

This isn't some distant possibility. Here's the actual deal structure that's reportedly in play:

2026: Meta begins renting TPUs from Google Cloud

2027: Meta starts purchasing TPUs outright for its own data centers

Why the phased approach? It's smart risk management. Meta tests the waters with cloud rentals, validates performance and reliability, then commits to buying. If it goes well, this becomes a permanent shift. If not, Meta can walk away having rented rather than invested billions.

But here's why Wall Street thinks it'll go well: The Gemini 3 precedent.

Gemini 3 was trained entirely on TPUs and immediately became what industry experts describe as the "current state of the art" in AI models. Salesforce CEO Marc Benioff publicly switched from ChatGPT to Gemini 3, calling it superior.

That's not minor. That's validation. If TPUs can train state-of-the-art models, they can serve Meta's workloads.

The Algorithmic Trading Angle: Why This Matters for Trading Platforms

So far, this reads like a chip industry story. But for anyone building algorithmic trading systems—especially robo-advisories and algo trading platforms—this is where the story becomes directly relevant.

Infrastructure Costs Drive Platform Economics

Building an algorithmic trading platform costs roughly $250,000 to $500,000 in startup infrastructure and R&D, depending on complexity. Typical line items (these are ranges, so they do not sum exactly to the total):

- $100,000 - $300,000 for computing hardware and cloud costs

- $75,000 - $250,000 for algorithm development (including ML/AI optimization)

- $30,000 - $100,000 for machine learning tools and frameworks

As infrastructure costs drop due to chip competition, so do platform development costs. If TPUs cut compute costs by 35-40% relative to Nvidia GPUs, applying that to the $100,000 - $300,000 computing line above gives:

$35,000 - $120,000 in savings per platform deployment.
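The savings range follows directly from the computing line item and the assumed cost reduction. A minimal sketch, pairing the low cost with the low reduction and the high cost with the high reduction (the helper name is illustrative):

```python
def savings_range(cost_low, cost_high, cut_low, cut_high):
    """Return (min, max) savings for a cost range and a reduction range."""
    return cost_low * cut_low, cost_high * cut_high


# Computing hardware and cloud costs from the list above, with a 35-40% reduction.
savings_low, savings_high = savings_range(100_000, 300_000, 0.35, 0.40)

print(f"Savings per deployment: ${savings_low:,.0f} to ${savings_high:,.0f}")
```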

Mean-reversion and pivot-based strategies require:

- Continuous model retraining on historical OHLC data

- Real-time inference for signal generation

- Backtesting across millions of price scenarios

Lower infrastructure costs make more frequent model updates affordable, which in turn should improve strategy performance.

The Competitive Advantage: Speed and Efficiency

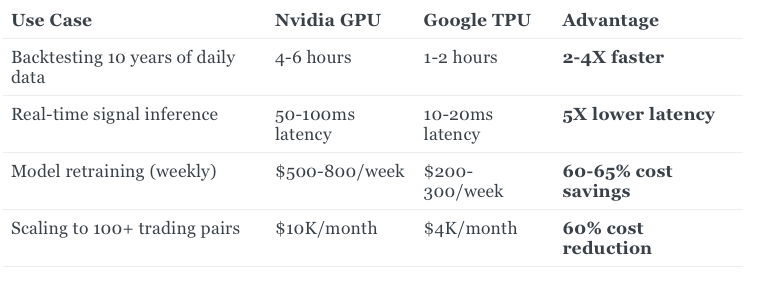

TPUs excel at the exact computational patterns used in algorithmic trading:

- Batch inference at scale (processing thousands of price data points simultaneously)

- Matrix operations (core to technical indicator calculations: Bollinger Bands, RSI, moving averages)

- Time-series prediction (what mean-reversion strategies depend on)

For trading platforms competing on speed and cost efficiency, these TPU efficiency gains are significant.
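For readers less familiar with the indicators mentioned above, here is a small sketch of a Bollinger-style mean-reversion signal in plain Python. It is an illustration, not any platform's actual strategy: the function names are hypothetical, the 20-bar window and 2-standard-deviation threshold are just the conventional Bollinger Band defaults, and population standard deviation is one of two common choices.

```python
from statistics import mean, pstdev


def bollinger_zscore(closes, window=20):
    """z-score of the latest close relative to the mean of the last `window` closes."""
    if len(closes) < window:
        raise ValueError("not enough price history for the chosen window")
    recent = closes[-window:]
    mid_band = mean(recent)
    band_width = pstdev(recent)
    if band_width == 0:
        return 0.0  # flat prices: no deviation to trade on
    return (closes[-1] - mid_band) / band_width


def mean_reversion_signal(closes, window=20, num_std=2.0):
    """'buy' below the lower band, 'sell' above the upper band, else 'hold'."""
    z = bollinger_zscore(closes, window)
    if z <= -num_std:
        return "buy"
    if z >= num_std:
        return "sell"
    return "hold"


# Toy series: 19 flat closes followed by a sharp drop.
closes = [100.0] * 19 + [90.0]
print(mean_reversion_signal(closes))
```

On an accelerator, the same calculation would typically be batched across thousands of symbols at once, which is where the hardware efficiency argument comes in.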

The Robo-Advisory Economics

This matters even more for robo-advisory platforms. The typical robo-advisory stack requires:

- Constant model retraining (daily/weekly)

- Real-time portfolio optimization

- Risk calculation across thousands of user portfolios

- Performance attribution analysis

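If TPUs can deliver this at 60% lower cost than Nvidia GPUs (the low end of the 60-65% efficiency figure cited earlier, and a more aggressive assumption than the 35-40% cost savings used above), robo-advisory margins improve dramatically: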

Scenario A (Nvidia GPU):

- Infrastructure cost: $50,000/month

- Robo-advisory fee: 0.5% AUM

- Breakeven AUM: ~$120M

Scenario B (Google TPU):

- Infrastructure cost: $20,000/month

- Robo-advisory fee: 0.5% AUM

- Breakeven AUM: ~$48M

That's a 2.5X improvement in unit economics simply from choosing the right hardware.
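The breakeven figures can be checked with a short calculation. This sketch assumes the 0.5% fee is charged annually on AUM and that infrastructure is the only cost being covered, which is a simplification; the function name is illustrative.

```python
def breakeven_aum(monthly_infra_cost: float, annual_fee_rate: float) -> float:
    """AUM at which annual fee revenue equals annual infrastructure cost."""
    return monthly_infra_cost * 12 / annual_fee_rate


FEE_RATE = 0.005  # 0.5% of AUM per year

gpu_breakeven = breakeven_aum(50_000, FEE_RATE)  # Scenario A
tpu_breakeven = breakeven_aum(20_000, FEE_RATE)  # Scenario B

print(f"Scenario A breakeven: ${gpu_breakeven / 1e6:.0f}M")
print(f"Scenario B breakeven: ${tpu_breakeven / 1e6:.0f}M")
print(f"Improvement: {gpu_breakeven / tpu_breakeven:.1f}X")
```

Because breakeven AUM scales linearly with monthly cost, the 2.5X figure is simply the ratio of $50,000 to $20,000.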

What Investors Really Think (The Market Doesn't Lie)

Here's what the $250 billion reallocation signals:

- TPUs are credible. They're not a science project. They're ready for production workloads at scale.

- Customer diversification is real. Even companies with a long Nvidia history (Meta) are testing alternatives. If it works, others follow.

- Cost matters. For hyperscalers dealing with $70-100B annual capex, a 35-40% cost savings on chips isn't trivial. It's transformational.

- The market isn't zero-sum anymore. Nvidia maintaining 80-85% market share instead of 95% still leaves room for massive growth. The pie is expanding faster than any single player can serve.
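The "not zero-sum" point comes down to simple arithmetic: a vendor's revenue holds steady as long as the market grows at least as fast as its share shrinks. A minimal sketch, assuming revenue is proportional to market size times share (the function name is illustrative):

```python
def required_market_growth(old_share: float, new_share: float) -> float:
    """Minimum market growth (as a fraction) that keeps revenue flat after a share drop."""
    return old_share / new_share - 1.0


for new_share in (0.85, 0.80):
    growth = required_market_growth(0.95, new_share)
    print(f"95% -> {new_share:.0%} share: market must grow at least {growth:.1%}")
```

Under that assumption, a drop from 95% to 80% share is offset by market growth of just under 19%, so any faster expansion still means higher absolute revenue.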

The Counter-Arguments (And Why They Matter)

Nvidia won't disappear. Here are the legitimate counterpoints:

1. TPUs Only Work in Google Cloud

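This has been true so far: TPUs have been available only through Google Cloud, not for on-premises deployment. The reported Meta deal, which would put TPUs in Meta's own data centers from 2027, would be the first major break from that. If you need hybrid infrastructure today, Nvidia is your answer.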

2. Nvidia Runs Everywhere

Nvidia GPUs work across clouds, on-premises systems, edge devices, and local workstations. TPUs have so far been available only on Google Cloud infrastructure. For companies with multi-cloud strategies, that's a real constraint.

3. Framework Flexibility

Nvidia's CUDA stack is the default for PyTorch. TPUs are best supported through JAX and TensorFlow, with PyTorch available via PyTorch/XLA. If your team is deeply invested in PyTorch, Nvidia remains the natural choice.

4. We've Seen This Before

Nvidia has survived multiple "threats"—from AMD, from Intel's discrete GPUs, from custom chips. They've adapted each time.

But here's the difference: Meta isn't a startup. It's one of the world's largest technology companies, with $100B+ annual revenue and the engineering talent to make a complex chip transition work. If it commits to TPUs after the rental phase, that's unlikely to be a temporary experiment. It's a lasting shift.

And if Meta does it, so will others.

What Happens Next?

Q1 2026: Validation Phase

Meta begins renting TPUs. The market watches performance metrics closely. If Meta's models hit quality targets faster and cheaper than with Nvidia chips, that's a public win for Google.

Q3-Q4 2026: Copycat Effect

If Meta's transition works, expect announcements from other hyperscalers. Amazon might accelerate its own custom chip development (Trainium, Inferentia). Microsoft might diversify its Nvidia dependence.

2027: The Real Test

Meta's TPU purchases go live at scale. The data center economics become clear. Nvidia's market share in new infrastructure orders visibly shifts.

2030: The New Normal

The market stabilizes with multiple credible chip suppliers. Nvidia remains dominant but no longer monopolistic. Pricing power shifts. Competition drives innovation.

The Takeaway: This Changes Everything (Eventually)

The $250 billion market reallocation wasn't irrational panic. It was rational repricing of a fundamental shift.

For the next 3-5 years:

- Nvidia remains the default choice for most AI workloads

- TPUs capture increasing share in high-volume, price-sensitive applications

- Competition drives costs down across the board

- Trading platforms and robo-advisories benefit from cheaper infrastructure

For algorithmic traders and fintech founders, the message is clear:

Your AI infrastructure costs are about to drop. The sooner you understand the TPU vs. GPU trade-offs, the sooner you can architect for cost efficiency.

The hardware war just got interesting. And unlike the passive observers in most industries, traders get to profit from the volatility it creates.

Learn More About Algorithmic Trading & AI Infrastructure

Ready to explore how modern trading platforms leverage AI and infrastructure optimization?

Discover Fintrens – an algorithmic trading platform designed for efficient execution and smart infrastructure decisions.

🔗 Visit: www.fintrens.com

At Fintrens, we understand that infrastructure costs directly impact trading platform profitability. Whether you're building algo trading systems or robo-advisory services, the shift toward efficient computing chips matters to your bottom line.