The Creepy Truth Behind Viral AI Saree Photos – What Google Really Knows About You

A young woman uploaded her photo to Google’s AI for a saree makeover — and what she discovered was terrifying. The AI didn’t just drape her in a saree; it also revealed a hidden birthmark that wasn’t visible in the original image.

At first, it looked like harmless fun. But in reality, this viral AI saree trend highlights serious AI privacy risks. According to recent surveys, 63% of consumers worry about how AI tools handle their personal data. And they’re right to be concerned.

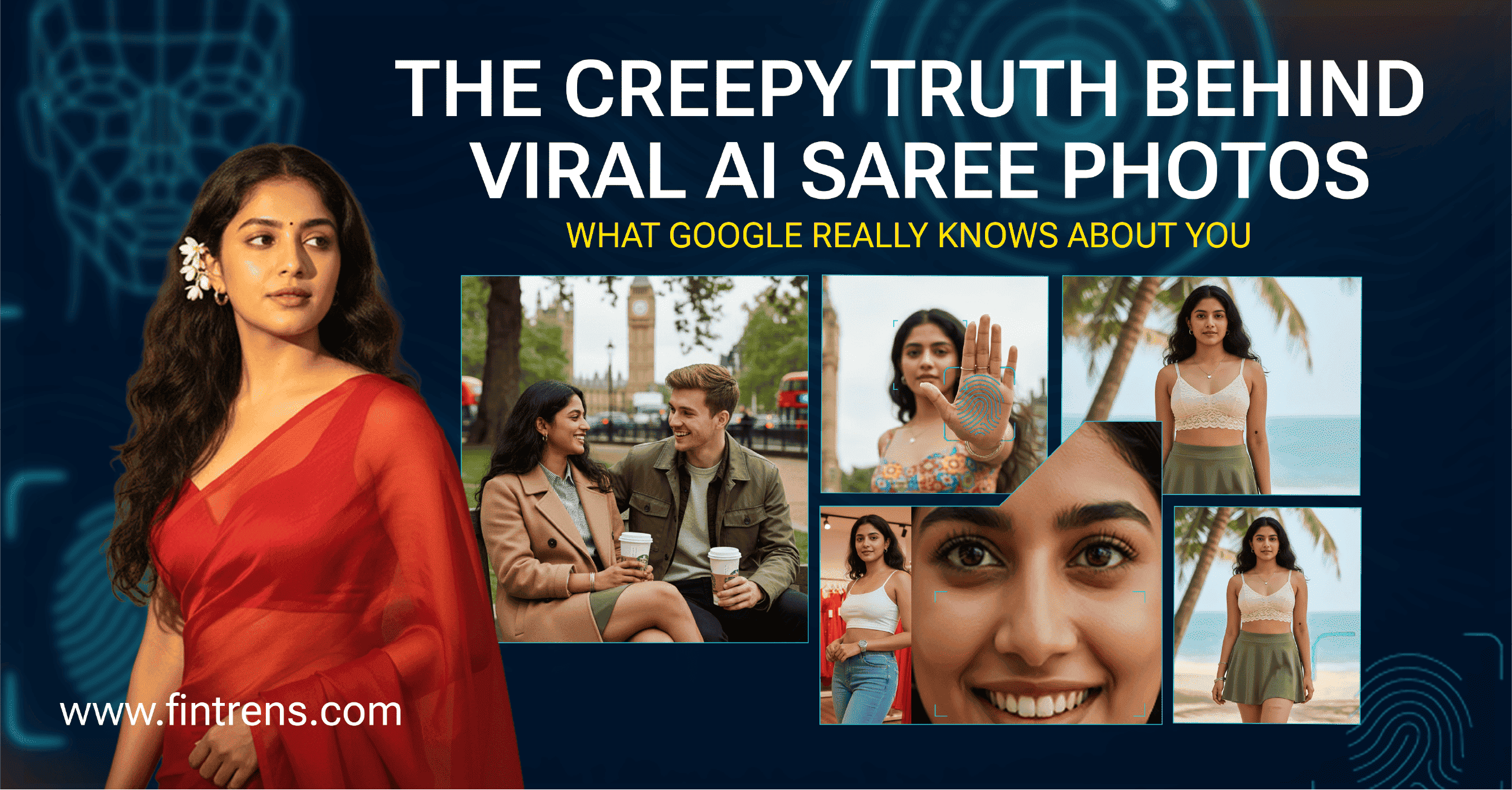

Riya’s Journey: A Story Told Through Photos

Meet Riya, a 22-year-old professional in India who loves sharing her life online. Like millions of others, she joined the viral AI saree trend.

Step 1: The Saree Makeover

Her simple photo turned glamorous, but it also exposed details she had never shared. This was more than fashion enhancement. It was AI inference in action, powered by massive datasets and her own digital trail.

Step 2: Everyday Uploads, Hidden Insights

Riya thought her other posts were harmless. But AI saw much more:

- Fashion style, brand preferences.

- Travel patterns, geo-locations.

- Social circle, possible tagging data.

- Iris pattern, skin health, stress levels.

From just these pictures, AI could already build her digital identity:

- Biometric identifiers (birthmark, scar, iris).

- Lifestyle choices (coffee, cosmetics, clothes).

- Financial potential (luxury brands, holidays).

- Social graph (friends, tagging, common locations).
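To make the "social graph" item concrete, here is a minimal Python sketch of how tagging data alone could reveal who someone spends the most time with. It assumes photos have already been reduced to lists of tagged names. The names and the functions `co_tag_graph` and `closest_contacts` are hypothetical and illustrate the idea only; they do not describe how Google or any other platform actually builds profiles.

```python
from collections import Counter
from itertools import combinations
from typing import Iterable, List, Tuple


def co_tag_graph(photos: Iterable[List[str]]) -> Counter:
    """Weighted edges: how often each pair of people is tagged in the same photo."""
    edges: Counter = Counter()
    for tagged in photos:
        # sorted(set(...)) gives each pair one canonical key, e.g. ("Asha", "Riya")
        for a, b in combinations(sorted(set(tagged)), 2):
            edges[(a, b)] += 1
    return edges


def closest_contacts(edges: Counter, person: str, top: int = 3) -> List[Tuple[str, int]]:
    """Rank the people most often tagged alongside `person`."""
    scores: Counter = Counter()
    for (a, b), weight in edges.items():
        if a == person:
            scores[b] += weight
        elif b == person:
            scores[a] += weight
    return scores.most_common(top)


# Hypothetical tag lists from four uploaded photos
photos = [["Riya", "Asha", "Dev"], ["Riya", "Asha"], ["Riya", "Karan"], ["Asha", "Dev"]]
print(closest_contacts(co_tag_graph(photos), "Riya"))  # [('Asha', 2), ('Dev', 1), ('Karan', 1)]
```

Even with four photos and no captions, the counts already suggest that Asha is Riya's closest contact. Adding shared locations or timestamps as extra edge weights would sharpen the picture further.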

Step 3: The Hidden Dangers Behind AI Saree Photos

- Deepfakes & Identity Misuse

AI saree photos can be morphed into fake or compromising images.

- Biometric Data Exposure

Selfies can reveal iris patterns and scars, and videos can reveal your gait. These are permanent identifiers: unlike a password, you can't change them.

- Cross-Platform Tracking

Your Instagram selfies, WhatsApp DP (display picture), and LinkedIn headshot can be stitched together, and could even be matched against CCTV footage.

- Background Exploits

AI can enhance details humans miss. Reflections in sunglasses may reveal cards, documents, or the faces of people behind you.

- Photo Library Insights

  - Google Photos suggesting "duplicates" or "similar photos" is not magic; it's AI scanning your gallery.

  - Automatic facial tagging means your entire contact list can be mapped.

  - Remember: once you grant full library permission, Google Photos can access every photo in it.

- Behaviour Manipulation

If an AI system infers stress from your face, you may suddenly start seeing ads for alcohol, pills, or speculative trading apps.

- Child Privacy Concerns

Kids' photos uploaded today may feed AI-driven profiles that follow them for life.

- Data Resale Risks

Not all apps keep your data safe. Some resell it to data brokers, insurers, or political groups.

- AI Misinterpretation

A scar might be wrongly flagged as a "disease", which could affect insurance or job opportunities.
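The "similar photos" suggestions mentioned under Photo Library Insights depend on some form of automated image comparison. One simple, well-known technique is the average hash (aHash), sketched below in Python. This is an illustration only, not Google Photos' actual method. It assumes each image has already been shrunk to an 8x8 grid of grayscale values (0 to 255), and the threshold of 5 differing bits is an arbitrary assumption.

```python
from typing import List

Grid = List[List[int]]  # assumption: 8x8 grayscale values, 0-255


def average_hash(pixels: Grid) -> int:
    """Pack one bit per pixel: 1 if brighter than the image's mean, else 0."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of bit positions where two hashes differ."""
    return bin(a ^ b).count("1")


def looks_similar(a: Grid, b: Grid, max_distance: int = 5) -> bool:
    """Flag two images as near-duplicates if their hashes differ in only a few bits."""
    return hamming(average_hash(a), average_hash(b)) <= max_distance


# A synthetic gradient, a slightly brightened copy, and an inverted version
photo = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]
brighter = [[p + 3 for p in row] for row in photo]
inverted = [[255 - p for p in row] for row in photo]
print(looks_similar(photo, brighter))  # True
print(looks_similar(photo, inverted))  # False
```

Brightening every pixel shifts the mean by the same amount, so the same pixels stay above it and the hashes match. That tolerance to small edits is what lets a gallery group near-identical shots without anyone looking at them.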

Step 4: Safer Photo Habits (Before & After Examples)

Riya realised she could still enjoy her photos, just in safer ways.

- 🕶️ Limit eye exposure → Sunglasses reduce iris data.

- 👗 Wear full outfits → Less skin = less biometric scanning.

- 🏡 Use clean backgrounds → A plain wall is safer than cafés with receipts, QR codes, or laptops.

- 🚶 Avoid landmark-heavy shots → Public places reveal your location.

- 📉 Post less, protect more → The fewer uploads, the weaker the AI puzzle.

Riya's journey shows how everyday photo habits can either expose us or protect us.

✅ Privacy Protection Checklist

Before you upload that next photo, ask yourself:

- 🔒 Do I really need to share this image with an AI tool?

- 📴 Can I strip metadata (location, time, device info) before uploading?

- 📂 Am I keeping sensitive files offline instead of cloud drives?

- 📱 Have I reviewed app permissions for camera, mic, and GPS?

- 👨‍👩‍👧‍👦 Am I avoiding unnecessary uploads of children’s photos?
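On the metadata question, here is a minimal standard-library Python sketch that removes APP1 segments from a JPEG. APP1 is where EXIF data (GPS coordinates, timestamps, camera model) and XMP data are normally stored. It assumes a well-formed JPEG with no standalone markers before the start-of-scan (SOS) segment, and it leaves other metadata segments (such as APP13 or comments) in place. The function name `strip_app1_metadata` is hypothetical; for everyday use, a maintained image tool is the safer choice.

```python
import struct


def strip_app1_metadata(jpeg: bytes) -> bytes:
    """Return a copy of a JPEG with every APP1 segment (EXIF, XMP) removed.

    Assumes a well-formed JPEG with no standalone markers before SOS.
    """
    if jpeg[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing SOI marker")
    out = bytearray(jpeg[:2])
    i = 2
    while i + 1 < len(jpeg):
        if jpeg[i] != 0xFF:
            raise ValueError(f"expected a marker at byte offset {i}")
        marker = jpeg[i + 1]
        if marker == 0xFF:  # padding byte before a marker; skip it
            i += 1
            continue
        if marker in (0xDA, 0xD9):  # SOS (image data starts) or EOI: copy the rest unchanged
            out += jpeg[i:]
            break
        # Segment length is big-endian and counts its own two bytes, not the marker
        (length,) = struct.unpack(">H", jpeg[i + 2:i + 4])
        end = i + 2 + length
        if marker != 0xE1:  # keep every segment except APP1
            out += jpeg[i:end]
        i = end
    return bytes(out)
```

Usage, assuming a local file named `photo.jpg`: read it with `open("photo.jpg", "rb").read()`, pass the bytes to `strip_app1_metadata`, and write the result to a new file before uploading. Because the compressed image data after SOS is copied byte for byte, the photo itself is not re-encoded and loses no quality.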

Why This Matters

To us, photos are memories. To AI, they're data mines. Add in voice assistants like Alexa and Siri, plus smartwatches, and the map of your life becomes frighteningly detailed.

This is digital privacy in 2024: a world where every photo you upload is a piece of your identity puzzle.

Fintrens: Privacy First in Trading

At Fintrens, we put privacy at the centre of everything. Our trading bot Firefly only connects with your broker to execute trades. We never collect or store your KYC, bank details, or personal identifiers.

Your broker manages compliance. Firefly handles only your trades. Unlike other AI tools that might exploit your data, Firefly is built to protect it.

Final Thoughts

The viral AI saree trend isn’t just about fashion. It’s about your identity. Every uploaded photo is a clue, and AI is piecing them together into a complete profile.

👉 Stay aware. 👉 Stay cautious. 👉 Stay in control.

🔹 Extended Disclaimer

While this article highlights photos as examples, privacy risks are not limited to images. AI systems also use location, voice recordings, browsing activity, and IoT signals to build profiles.

This article mentions Google because of the viral AI saree trend, but the reality is that all AI systems — Google, Meta, Amazon, OpenAI, and more — rely on millions of data points to operate accurately.

The issue is not one company or one feature. The issue is how much we share without realising.

🔗 Learn more: www.fintrens.com

📘 Docs: docs.firefly.fintrens.com

💬 Join WhatsApp: Fintrens Channel

🌐 Join us: www.fintrens.com/join